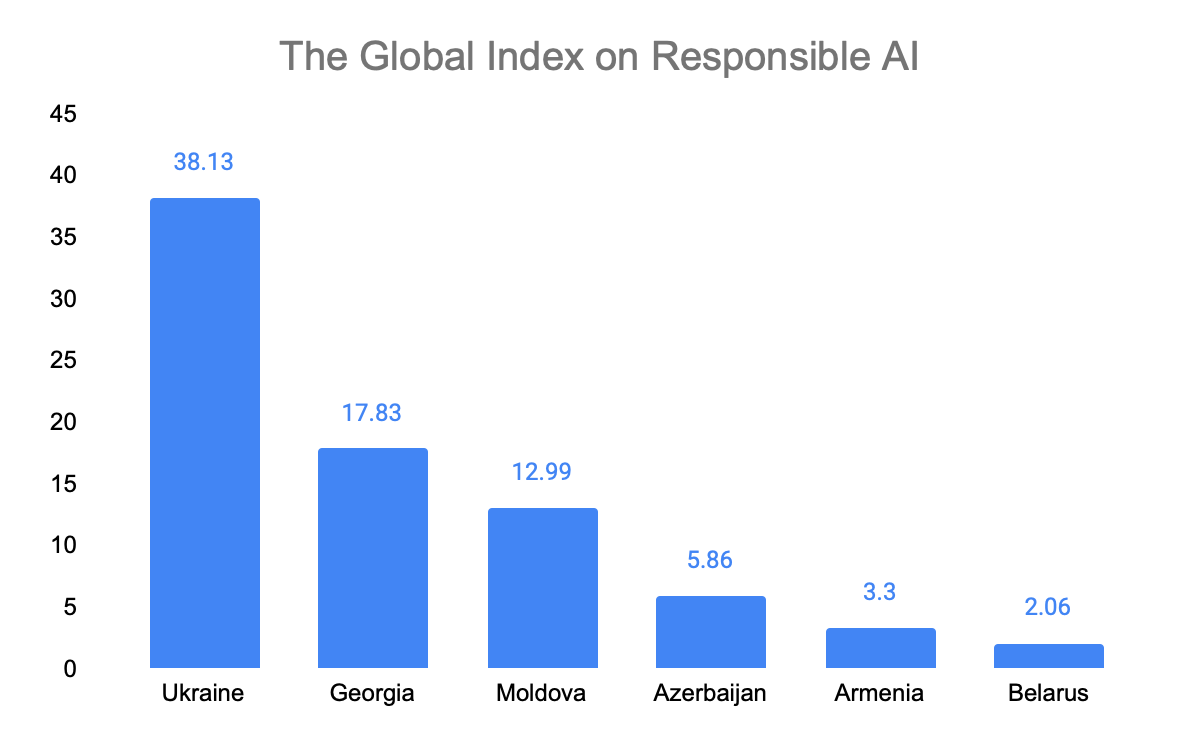

In June 2024, the Global Center on AI Governance released the first edition of the Global Index on Responsible AI (GIRAI). GIRAI is the first tool to establish and assess globally relevant benchmarks for responsible AI across countries, and the study is the most extensive data collection on responsible AI conducted worldwide to date. The first edition evaluates 138 countries; Georgia scored 17.83 out of 100 points and ranked 60th.

Short Overview of the Global Index on Responsible AI

The research defines Responsible AI as follows: Responsible AI refers to the design, development, deployment and governance of AI in a way that respects and protects all human rights and upholds the principles of AI ethics through every stage of the AI lifecycle and value chain. It requires all actors involved in the national AI ecosystem to take responsibility for the human, social and environmental impacts of their decisions.

The Global Index on Responsible AI measures 19 thematic areas of responsible AI, which are clustered into 3 dimensions:

- Human Rights and AI

- Responsible AI Governance

- Responsible AI Capacities

Each thematic area assesses the performance of 3 different pillars of the responsible AI ecosystem:

- Government frameworks

- Government actions

- Non-state actors’ initiatives

The study is based on primary data gathered and analyzed by country researchers in 138 countries. The governmental and non-governmental initiatives featured in the study were implemented between November 2021 and November 2023.

As part of the research, the Global Center on AI Governance collaborated with 16 regional hubs worldwide, including the Institute for Development of Freedom of Information (IDFI), which was responsible for Eastern Europe and Central Asia, covering 12 countries. Representatives from IDFI were directly involved in refining and piloting the research methodology.

Global Findings and Tendencies

AI governance does not translate into responsible AI

In the countries evaluated, the National AI Strategy often serves as the primary, if not the sole, document addressing AI usage. Specifically, 39% of the countries included in the study possess a national AI strategy that outlines the development, use, and management of AI at the national level. However, very few of these strategies incorporate the principles of responsible AI.

Mechanisms ensuring the protection of human rights in the context of AI are limited

The study revealed that few countries have implemented mechanisms to protect against AI-related risks. These mechanisms include the AI Impact Assessment, found in 43 countries, which evaluates the actual and potential threats posed by AI; remedy and redress mechanisms, present in 35 countries; and public procurement guidelines that regulate the acquisition and use of AI by the public sector.

International cooperation is an important cornerstone of current responsible AI practices

Across all regions, international cooperation was the thematic area with the highest score. A key international document that all 138 countries participating in the study have adhered to is UNESCO's "Recommendation on the Ethics of Artificial Intelligence."

Gender equality remains a critical gap in efforts to advance responsible AI

Gender equality in AI emerged as one of the thematic areas with the lowest score in the study. The research revealed that only 24 countries have government frameworks addressing this topic. Additionally, governments in 37 countries have launched initiatives focused on gender equality and AI. Meanwhile, the non-governmental sector, predominantly consisting of academia and civil society, has implemented initiatives in this area in 67 countries.

Key issues of inclusion and equality in AI are not being addressed

Thematic areas concerning marginalized or underrepresented groups received notably low scores, underscoring that governments are not sufficiently addressing inclusivity and equality in the realm of artificial intelligence.

Workers in AI economies are not adequately protected

Only 33 of the countries included in the study had government frameworks regulating Labor Protections and the Right to Work in the context of artificial intelligence. In this regard, the regions of Europe and the Middle East demonstrated relatively good results.

Responsible AI must incorporate cultural and linguistic diversity

Cultural and linguistic diversity in artificial intelligence is crucial for preserving cultural heritage and supporting low-resource languages. However, according to the research results, this thematic area received one of the lowest scores.

An interesting initiative identified in this area is Woolaroo, a research project by the Ministry of Culture of Mexico, which aims to preserve Mexico's endangered local languages using machine learning.

There are major gaps in ensuring the safety, security and reliability of AI systems

Only 28% of the countries assessed (38 countries) have taken effective measures to ensure the security of artificial intelligence systems. Furthermore, only 25% of the countries have binding government documents that regulate the technical security of AI and establish security standards.
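The findings above quote most results as country counts, while this one also gives a percentage. As a minimal sketch, the counts can be put on a common scale by expressing each as a share of the 138 countries assessed. The dictionary labels and the `share` helper are illustrative names chosen here, not terms from the study; the counts themselves are the ones quoted in the text.

```python
TOTAL_COUNTRIES = 138  # countries assessed in the first edition

# Country counts as quoted in the findings above.
# The labels are shorthand invented for this sketch.
counts = {
    "AI impact assessments": 43,
    "Remedy and redress mechanisms": 35,
    "Gender equality frameworks": 24,
    "Government gender equality initiatives": 37,
    "Non-state gender equality initiatives": 67,
    "Labor protection frameworks": 33,
    "Effective AI safety measures": 38,
}


def share(count, total=TOTAL_COUNTRIES):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


shares = {name: share(n) for name, n in counts.items()}

for name, pct in shares.items():
    print(f"{name}: {counts[name]} of {TOTAL_COUNTRIES} ({pct}%)")
```

For the safety measures figure, 38 of 138 works out to 27.5%, which is consistent with the 28% reported in the study once rounded to a whole number.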

Universities and civil society are playing crucial roles in advancing responsible AI

The study found that universities and civil society organizations are the most active entities in promoting responsible AI, a trend that is consistent across all global regions. Representatives from these sectors frequently work in thematic areas such as gender equality and cultural and linguistic diversity, where other stakeholders often show less initiative.

Overview of Georgia’s Results

In the Global Index on Responsible AI, Georgia scored 17.83 out of 100, ranking 60th out of 138 countries.

| Index Score | Pillar Score: Government Frameworks | Pillar Score: Government Initiatives | Pillar Score: Non-government Initiatives | Direction Score: Human Rights and AI | Direction Score: Responsible AI Governance | Direction Score: Responsible AI Capacities |
|---|---|---|---|---|---|---|
| 17.83 | 28.30 | 7.93 | 16.69 | 17.20 | 11.03 | 20.60 |

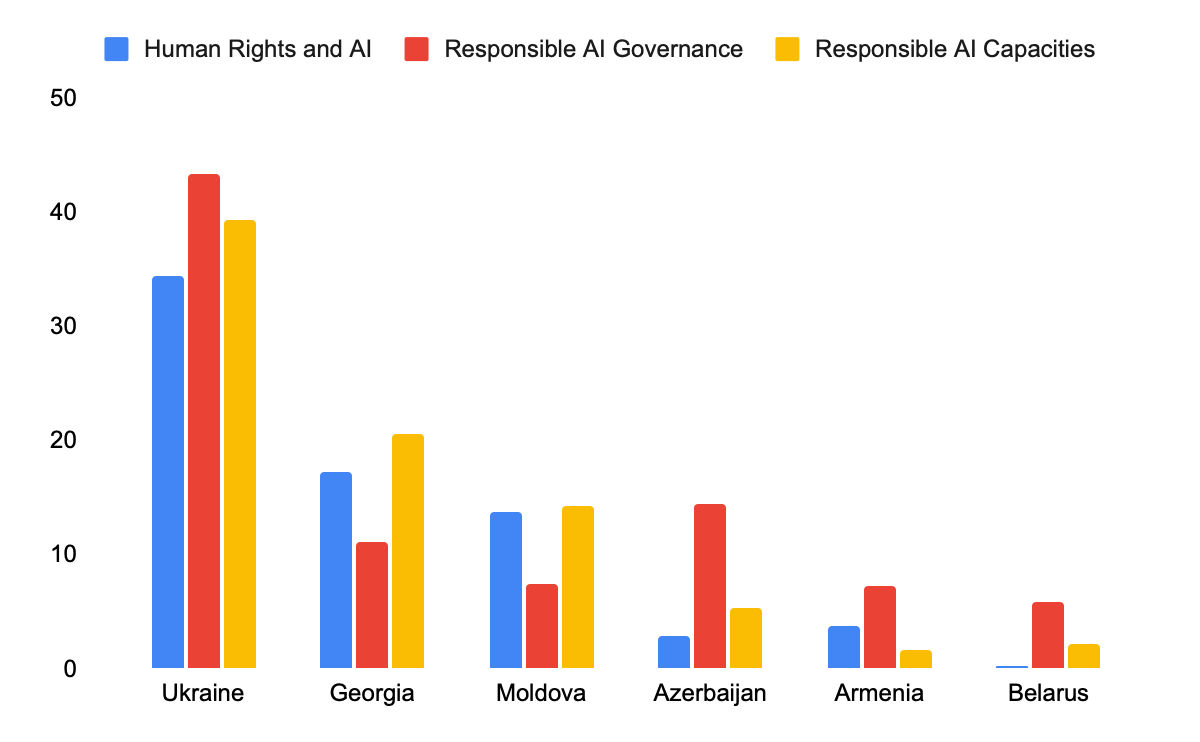

Georgia received its lowest direction score in Responsible AI Governance, with 11.03 out of 100. The primary reason for this low rating is the absence of a national AI policy document. The only government document addressing some of the thematic areas in this direction (5 out of 9) is the Order of the President of the National Bank of Georgia on Approval of the Regulation about Data-driven Statistical, AI, and Machine Learning Model Risk Management. However, this order regulates only the financial and banking sectors.

Amid generally low global scores for the right to access remedy and redress, the Law of Georgia on Personal Data Protection stands out. Article 19 of this law sets out the right to contest a decision made by automated processing. While the law does not explicitly mention artificial intelligence, referring instead to "automatic means," its broad wording encompasses AI along with other automated technologies. However, the law primarily addresses the protection of personal data and does not cover remedies for damage caused by AI systems.

Georgia achieved relatively better results in the direction of Human Rights and AI, primarily due to the active involvement of the non-governmental sector, including academia and civil society. This was notably significant given the lack of state-led initiatives in this area. Existing government documents on AI in Georgia address only 2 out of 7 thematic issues. The order of the President of the National Bank deals with issues of bias and discrimination, while the Personal Data Protection Law provides specific mechanisms for the protection of personal data and privacy.

Georgia also received a relatively high rating in Responsible AI Capacities. This rating is partly due to the country's adherence to the UNESCO Recommendation on the Ethics of Artificial Intelligence, which emphasizes international cooperation, one of the key thematic areas in this direction.

The GIRAI study highlighted that in Georgia, the non-governmental sector, especially academia and civil society organizations, is actively engaged in the development of responsible artificial intelligence. While the private sector has numerous initiatives to create and use artificial intelligence, these often overlook ethical AI principles. This oversight can largely be attributed to the lack of regulatory norms governing ethical considerations in AI development and application.

According to the study, government initiatives for AI in Georgia are notably scarce. Given the absence of strategic government documents related to AI, the order issued by the President of the National Bank stands out. This order, directed specifically at the financial and banking sectors, focuses on the ethical use of artificial intelligence.

Comparison of Georgia's Results with Other Eastern European Countries

Ukraine stands out in the Eastern European region for its proactive approach to artificial intelligence. It is the only country in the region with a national policy document dedicated to AI—the "Concept of Development of AI in Ukraine." This document outlines the priority areas for AI development in the country and sets ethical standards for human rights protection. Additionally, the active participation of Ukraine's non-governmental sector in promoting responsible AI further strengthens the country's profile. Thanks to these government initiatives and the involvement of civil society, Ukraine has received positive evaluations in the study.

Georgia, while trailing Ukraine in all pillars and directions of the study, still performs well within the Eastern European region. Georgia ranks second among the countries evaluated in the region in every pillar and direction except Responsible AI Governance, where Azerbaijan surpasses it by 3.46 points (note that Turkey and Russia were not included in the study).

Conclusion

The Global Index on Responsible AI was published for the first time in 2024, providing countries with a tool to evaluate how effectively they manage the social, economic, and other risks associated with new technologies while promoting innovation. This initiative marks a significant step in guiding global efforts to ensure that AI development aligns with ethical standards and responsible practices.

The results for Georgia indicate the need for a unified vision and consistent approaches within the country, both in terms of promoting AI system development and adhering to ethical standards during their use. It is also crucial to ensure the equal involvement of all stakeholders and to pay attention to international standards and principles for the responsible use of AI. This comprehensive approach will help Georgia advance its AI capabilities while safeguarding ethical and societal norms.

The study will be published annually until 2030, providing countries with ongoing opportunities to enhance their approaches and adopt good practices. This regular publication will enable countries to track their progress over time and align their strategies with international standards, facilitating continuous improvement in the responsible use of artificial intelligence.

See the complete version of the study and the global report.